This post details the process of creating Nettle, a challenging word game based on the relations between the meanings of words.

Background

In 2021 Josh Wardle’s game Wordle became a viral hit. I expect most readers are probably already familiar with the game, in case you aren’t the gist of it is this: you get 6 guesses to find a 5 letter word. Every guess must itself be a real US English word. In response to each guess, the game will tell you whether each letter in your guess is in the correct position, is in the target word but in a different position, or is not in the target word. There’s a new target word every day, and all players share the same target word.

It’s a clever concept: The daily nature of the game keeps people coming back, instead of bingeing 20 puzzles and getting bored. Everyone getting the same word encourages friends to compete with each other. And, perhaps most cleverly, the game will create a spoiler-free record of your performance using a bunch of emojis, which can easily be pasted into any modern messaging application, again encouraging friends to compete. This helped the game spread virally, and also helped to keep people interested by having friends organically remind each other to complete each day’s puzzle.

One of the strengths of Wordle is its short nature. Completing one puzzle each day is a very small commitment, often it can be completed in 5 minutes. But some players wanted more, and so spinoffs began to appear.

For example, Quordle and Octordle ask players to solve 4 or 8 Wordle puzzles simultaneously, with each guess being applied to all puzzles. These games offer longer engagement each day, and also can involve more complex strategies.

Not all of these spinoffs were serious. MD5LE challenges users to guess a random 32 character hexadecimal MD5 hash in 6 guesses

But Semantle was the spinoff that inspired me. Semantle uses word2vec in order to calculate the semantic difference between each guess and the target word. Semantically similar in this context means that the words are used in similar contexts. Semantle challenges players to think about words in a different way. I was not very good at it, frequently getting stuck with no idea how to interpret the similarity scores I’d received, no idea where to go next.

For this reason, I was inspired to make my own version.

Idea

Some years prior I’d had a fleeting idea for a project to find English words that could be accurately phonetically represented in katakana, and to find words pronounced differently in English but identically in Japanese. Ultimately I never started this project, but while I was trying to determine how difficult it would be I’d been looking for open source English dictionaries. One of the options that came up in my searches was WordNet.

WordNet is a permissively-licensed database of English words and their semantic relations. Where word2vec can tell you that two words are frequently used in similar contexts, WordNet can tell you the nature of the relation between two words. For example, if they are antonyms.

WordNet is such a cool database that I knew I had to make a game out of it. But how?

Initially (taking inspiration from the word “net”) I had an idea for a game where you’d make a few guesses and try to encircle the meaning of the target word, then tighten the borders until you found the exact word. There were several flaws with this approach such as the difficulty to build it, but I think the most glaring flaw was that I couldn’t think of a way to represent the game state through emojis. Given the importance of shareable emojis in Wordle and popular spinoffs such Heardle, this was a dealbreaker.

The idea that I eventually came to was to give players “directions” from their guess through the shortest path of connected words in order to reach the target word. Kind of like a “six degrees of separation” concept. Each direction would describe the relation between a word and the next, but not reveal anything about the specific words.

And so Nettle was conceived.

A quick intro to WordNet concepts

Since Nettle is a game based around navigating WordNet, it’s necessary to get some basics about WordNet out of the way first.

The core concept of WordNet is not “words” like you might expect, but rather “synsets”. Since WordNet is concerned solely with the ways that meanings connect, WordNet does not connect individual words to each other, but connects collections of synonyms to each other. Words with the same spelling but different meanings will be in different synsets, whereas words with completely different spellings but a shared meaning will be in the same synset. Each synset does contain a list of the words which make it up, and each of these words is called a “lemma”.

Throughout this post (and indeed throughout Nettle’s code) I use the term “word” a lot, but WordNet also includes “collocations”, which are sets of words that commonly go together. For example, “electric motor” is recognised by WordNet even though it comprises two words.

WordNet links synsets together via pointers. Pointers describe the relationship between synsets.

Many English speakers will be familiar with the “antonym” relationship type, but many of the other relationship types recognised by WordNet are not so well known. For example, “member holonym” is certainly not a phrase I ever encountered in compulsory education.

In WordNet each relationship type is represented by a single character called a “pointer symbol”. These symbols aren’t always intuitive and, more importantly, they aren’t emoji. So one of my first tasks was to map which of these relationship types to include in Nettle and how to represent them.

Getting this mapping right is actually really important for gamefeel. If I include relationship types that aren’t intuitive or commonly understood by the player, it’ll be unclear how two words are related, and even once the answer is revealed it might feel unfair. But if I exclude relationship types that the player is familiar with, they’ll be confused as to why two words that they can discern a relationship between are not considered related by the game.

Also, for practical reasons which will be apparent later, I can only include relationships with a reflexive pointer, so any pointer symbols without a reflect were automatically disqualified.

| WordNet Pointer Symbol | Pointer Type | Reflect Type | Description | Example | Syntactic Categories | Nettle Emoji | Notes |

|---|---|---|---|---|---|---|---|

| ! | Antonym | Antonym | The related word has the opposite meaning of the current word. | Hate is an antonym of love. | noun, verb, adjective, adverb | 🚫 | |

| @ | Hypernym | Hyponym | The related word has a broader meaning that encompasses the meaning of the current word. | Fruit is a hypernym of banana. | noun, verb | 🔼 | |

| @i | Instance Hypernym | Instance Hyponym | Same as hypernym, but the current word refers to a specific entity. | Terrestrial planet is an instance hypernym of Earth. | noun | 🔼 | Merged with Hypernym. |

| ~ | Hyponym | Hypernym | The related word has a more specific meaning that’s included within the current word. | Rose is a hyponym of flower. | noun, verb | 🔽 | |

| ~i | Instance Hyponym | Instance Hypernym | Same as hyponym, but the related word refers to a specific entity. | Neil Armstrong is an instance hyponym of astronaut. | noun | 🔽 | Merged with Hyponym. |

| #p | Part Holonym | Part Meronym | The related word is something that contains the current word as a component. | Leg is a part holonym of foot. | noun | 🦵 | |

| %p | Part Meronym | Part Holonym | The related word is a component of the current word. | Headline is a part meronym of newspaper. | noun | 🦶 | |

| #m | Member Holonym | Member Meronym | The related word relates to something that contains instances of the current word. | Galaxy is a member holonym of star. | noun | 🌌 | |

| %m | Member Meronym | Member Holonym | The related word relates to an element that belongs to the current word. | State is a member meronym of country. | noun | 🌟 | |

| #s | Substance Holonym | Substance Meronym | The related word is something composed of the current word. | Wine is a substance holonym of grape. | noun | 🍷 | |

| %s | Substance Meronym | Substance Holonym | The related word is something that makes up the current word. | Ice is a substance meronym of glacier. | noun | 🍇 | |

| + | Derivationally related form | Derivationally related form | Words that are used in different parts of speech but have the same root and closely-related meaning. | Daily and day are derivationally related. | noun, verb | N/A |

Derivationally related forms specifically pertain to lemmas, but throughout most of the WordNet database they are specified at the synset level. For example, “muliebrity” is considered by WordNet to be derivationally related to “feminine”, because it’s in the same synset as “femininity”. This could result in confusing scenarios for players. Also the documentation says this only applies to nouns and verbs, but I’ve found it used for adjectives too. |

| = | Attribute | Attribute | Describes a relationship between a noun and an adjective that both represent the same concept. | Acknowledgement has an attribute of acknowledged. | noun, adjective | N/A |

Not consistently specified. E.g. “femininity” and “feminine” are related via “derivationally related form” and not “attribute”. Suffers from the same issue as “derivationally related form”. |

| ;c | Domain of synset - TOPIC | Member of this domain - TOPIC | Mathematics is a topic domain of prime. | noun, verb, adjective, adverb | N/A | Clues would be too broad to be useful | |

| -c | Member of this domain - TOPIC | Domain of synset - TOPIC | Programmer is a topic member of computer science. | noun | N/A | ||

| ;r | Domain of synset - REGION | Member of this domain - REGION | United States of America is a region domain of Social Security number. | noun, verb, adjective, adverb | N/A | Only specified sporadically through the WordNet dataset, which would be confusing to players. | |

| -r | Member of this domain - REGION | Domain of synset - REGION | Mate is a region member of Australia. | noun | N/A | ||

| ;u | Domain of synset - USAGE | Member of this domain - USAGE | Trademark is a usage domain of WordNet. | noun, verb, adjective, adverb | N/A | ||

| -u | Member of this domain - USAGE | Domain of synset - USAGE | The related word exemplifies the concept of the current word. | Cakewalk is a usage member of figure of speech. | noun | N/A | |

| * | Entailment | The current verb implies or requires that the related verb must have also been done. | Get the hang entails practice. | verb | N/A | No reflect type | |

| > | Cause | The current verb necessitates that the related verb must also happen. | Show causes see. | verb | N/A | No reflect type, only specified sporadically | |

| ^ | Also see | The current word has a loose connection to the related word. | Selfish, also see inconsiderate. | verb, adjective | N/A | No reflect type, so vague that it wouldn’t be an actionable clue | |

| $ | Verb group | Verb group | Verbs that are closely related in meaning but not equivalent. | Receive (come into possession of) and accept (receive willingly something given or offered) are in a verb group. | verb | N/A |

Largely redundant because these words are usually also closely connected via hypernym / hyponym relationships. Also a tricky concept to convey to players, and doesn’t clearly indicate the direction of the relationship. Just not worth it. |

| & | Similar to | Similar to | Adjectives that are not quite synonyms but are very close in meaning. | Fast is similar to rapid. | adjective | N/A | Only applied to adjectives and the game primarily relates to nouns. Technically this would be a good thing to consider, but it wasn’t a priority for me. |

| < | Participle of verb | Indicates a verb that is closely related to the current adjective. | I actually couldn’t find a single example of this in WordNet. | adjective | N/A | No reflect type, very rarely specified | |

| \ | Pertainym (pertains to noun) | Indicates a noun that is closely related to the current adjective. | Interject pertains to interjection. | adjective | N/A | No reflect type | |

| \ | Derived from adjective | Indicates an adjective that is closely related to the current adverb. | Quickly is derived from quick | adverb | N/A |

No reflect type On the online version of WordNet this seems to be merged with “pertainym”. |

There’s a community project called Open Multilingual WordNet which improves upon WordNet in many ways. In fact, it solves many of the issues mentioned in the table above. If I ever make a Nettle v2 I’ll probably move over to this dataset and be able to make some more of these concepts playable.

Network precalculation

Alright, so now that we’ve got our data source, the next missing piece is how we generate clues for the player. Given a guess word we should be able to provide a series of step-by-step directions to the target word.

Perhaps the most intuitive answer here is to implement a popular pathfinding algorithm, maybe something like A*. Certainly this was what came to mind first for me, along with some ideas around using PostgresSQL or perhaps a graph database. But ultimately my goal was to make this game freely available on the internet and capable of handling many simultaneous players. Additionally, since this is just a hobby project, it has to run on a shoestring budget.

There’s another option here which can yield better runtime performance, at the expense of much much higher upfront compute cost. What if for a given target word we precomputed and stored the shortest route from every possible guess? Then the runtime performance is a single hash table lookup.

And if we’re already planning to run an exhaustive calculation for every single node in the network, using A* to calculate the fastest route from every guess actually ends up being very far from the most efficient option. It gets beaten out by a more brutish method, a breadth-first blind search.

Let’s start at the destination word. If the user guesses this, they’ve won, we don’t have to return any directions. Let’s call any such words distance-0, since they have a distance of zero from the destination.

Now, from this starting point, let’s find every word that the destination word is connected to. We know that every single one of those words can reach the destination word in a single step. So their directions just represent that step to the destination. These are distance-1 words. For each of them, we’ll just record the direction to the destination.

Now from every one of those distance-1 words, let’s look at all their connected words. For each connected word, if it already has some directions attached to it then we know it already has a shorter route, so we can skip it. But for any connected words without directions attached, that’s a distance-2 word. In order to provide directions from a distance-2 word to the destination word, we can just link back to the distance-1 word that we came from.

We can then repeat this process until eventually there are no more connected words that don’t already have directions attached.

By working backwards from the destination like this, we can assign a complete set of directions to every word in the network, and we only have to visit each word a single time.

Because we build the directions backwards, starting at the destination and then finding every possible starting point, this also means that we follow every relationship in the opposite direction to how the player will be expected to traverse it. This is why we’re only able to consider relationships that have a reflexive relationship type.

Connecting homographs

You may have noticed a quirk in the wording of the description of distance-0 words above: that there can be multiple distance-0 words. This wasn’t a mistake.

Remember that Wordnet is organised in terms of synsets. Synsets have nothing to do with spelling, they’re all about meaning. But in Nettle, the player will make guesses based on a particular spelling that they input, they don’t have to specify the meaning. English is a language that contains many homographs, words that have the same spelling but different meanings. In order for the player to win they need to guess a certain spelling, but there might be multiple synsets associated with that spelling. All of these synsets have distance-0.

But this issue doesn’t just apply to destination words. For example, what if somebody types “basketball” as a guess and they are given the directions 🦶🔼🔼🔼🔼🔽🔽🔽🔽🔽🔽. This is not exactly a promising clue, but maybe they try to pursue it anyway. What’s a part of basketball? The ball, perhaps. So they enter “ball” as a guess.

Now imagine their surprise when they get the directions 🔽. In one step they managed to skip 10 other steps. Doesn’t this feel kind of arbitrary and not fun?

In order to overcome this, we can add an extra relationship that didn’t exist in the original WordNet dataset: homograph. This way, different words with the same spelling are never more than 1 direction apart, preventing confusion on the part of the user. Revisiting the example from before, instead of getting an 11 step clue, the player will now instead get 🔼🖋️🔽.

For those curious, that first long clue represents the following path: basketball🦶rebound🔼catch🔼touch🔼act🔼event🔽social event🔽function🔽party🔽dance🔽ball🔽prom

The shorter one with homographs is: basketball🔼ball🖋️ball🔽prom

Another advantage with connecting homographs like this is that it makes the network more densely connected at the layers of higher specificity. Without homograph connections, the network generally looks like a tree, with many clusters of related words but few links between these clusters. As you can see in the example above, clues often involve ascending the tree to more general and broad terms, and then descending back into specific terms in a different cluster. Adding homograph links adds a bunch of cross connections between these clusters, resulting in more varied clues.

Hopefully you can see the utility of the homograph connections, but how do we actually add them to the game? Do we have to fork WordNet? Do we read the entirety of WordNet and then store it in our own custom database so we can modify it as we please? Thankfully there’s an easier way.

We can build an abstraction layer in front of WordNet. All access to WordNet will go through this abstraction layer. Then, we can simply add in our relationships on the fly. This does have a performance cost, but remember that we’ll be doing all this work upfront due to the precalculation technique, so performance isn’t essential here.

Every time the abstraction layer is asked for a list of connections for a word, as well as checking the list of pointers in WordNet, it will also do a lookup for any other synsets with a spelling in common and return these as additional connections. And that’s all that’s required, this simple wrapper drastically changes the effective connectivity of the network.

async getConnections(word: Word): Promise<Array<Connection>> {

const pointerConnections = word.ptrs.map(ptr => new Connection(this, ptr));

const homographConnections =

(await this.lookup(word.lemma))

.filter(w => w.pos !== word.pos || w.synsetOffset !== word.synsetOffset)

.map(w => new Connection(this, w, Relationship.Homograph));

return pointerConnections.concat(homographConnections);

}One final note on homographs is that because the player makes a guess by entering a spelling, this also means that their guess can easily cover multiple synsets. In this case, we simply give them directions for whichever synset is closest to the destination word.

Prototype

And with our directions working, we’re now ready to build a playable prototype. It’s important to build a proof-of-concept prototype as early as possible, because the prototype might expose some fundamental impossibility, or it might just reveal that the game isn’t fun. It would be better to make such a discovery before investing many hours in the project. (This principle is referred to as “failing fast”)

In order to make a prototype for Nettle, I made a quick-and-dirty CLI interface to interface with the network:

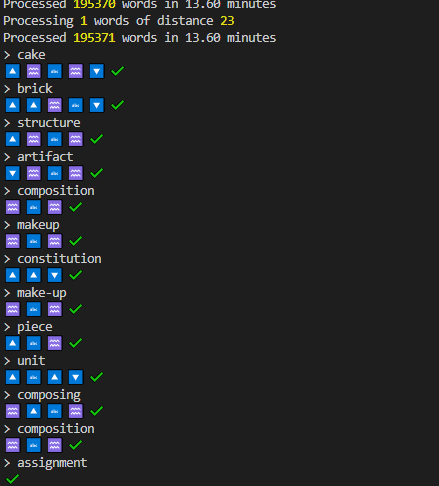

The prototype quickly revealed some major issues. Most of these were fairly simple to fix. For example, I’d accidentally mixed up some of the emojis so that they represented the opposite concept from what was intended.

I also discovered that I could change the order that words would be considered during the pregeneration stage, such that if routes of equal length existed between two words, the route which used more commonly-used words would be prioritised. This was a subtle change but it did seem to make the game a little more enjoyable.

I noticed some other things during prototype playtesting that I made a mental note of to address later:

- Sometimes the puzzle felt too hard, and I wanted to give up and just see the target word.

- Sometimes even if I dug around in the variables to view the target word, it was unclear how I was supposed to get there. In order for players to get a feel for how the game worked, I thought it was important to provide them with an option to see exactly what each direction was pointing them towards.

- Selecting random words from the entire WordNet dataset often yielded very obscure target words.

- Proper nouns aren’t much fun.

But there was one issue that was much more pressing…

Separating Synonyms

The relationship types built into WordNet are based on the meanings of words. But when I introduced the custom homograph relationship type, I had opened a can of worms. This association was no longer based on meaning, but based on spelling.

WordNet considers all words with identical meanings to be part of a single “synset”. So this means that as well as the homograph relationship pointing to words with the same spelling, it will also point to all the synonyms of those words. This was a massive problem and made the game confusing to the point of being unplayable. It needed to be fixed.

We already made an abstraction layer to sit in front of WordNet to handle homographs, can we extend that layer to handle this task too? You bet your lexicon we can!

There are a few steps to achieving this. Firstly, any time WordNet returns synsets to us, we need to split those out into lemmas. Each synset contains a synonyms property which contains all the spellings, we just need to clone the synset once for each spelling. Each resulting word object will not contain the synonyms array, but instead a single spelling string. We’ll also give each word object a list of other word objects that belong to the same synset.

private synsetsToWords(synsets: Array<Network.Synset>): Array<Network.Word> {

return synsets.flatMap(synset => {

const synonymWords = synset.synonyms

.map<Network.Word>(synonym => ({

gloss: synset.gloss,

pos: synset.pos,

synsetOffset: synset.synsetOffset.toString(),

ptrs: synset.ptrs,

spelling: synonym,

wordsInSynset: [],

}));

for(const synonymWord of synonymWords) {

synonymWord.wordsInSynset = synonymWords;

}

return synonymWords;

});

}Then we need to modify the lookup code. The lookup functions in WordNet are designed to take a spelling and return all synsets containing this spelling. Now that we’re converting each synset into a series of distinct words, if left unmodified the lookup function will also return a bunch of words that don’t match the provided spelling, due to belonging to the same synset as a word that does match the spelling. We can fix this by adding a simple filter to the lookup function.

lookup(searchText: string): Promise<Array<Network.Word>> {

return new Promise((resolve, reject) => {

this.wordnet.lookup(wordnetSpelling, (synsets: Array<Network.Synset>) => {

resolve(this.synsetsToWords(synsets).filter(w => w.spelling == searchText));

});

});

}(The actual code looks a bit more complicated than this because WordNet has multiple functions for filtering the lookup based on Part of Speech.)

This is enough to split out each spelling, but there’s one important extra step: linking those spellings back together. Synonyms are the strongest semantic links possible, so it’s extremely important to include these. In order to do so, we can just modify our getConnections function from earlier to also read from the list of words in the synset that we attached to each word object:

async getConnections(word: Network.Word): Promise<Array<Connection>> {

const pointerConnections = word.ptrs.map(ptr => new Connection(this, ptr));

const homographConnections =

(await this.lookup(word.spelling))

.filter(w => w.pos !== word.pos || w.synsetOffset !== word.synsetOffset)

.map(w => new Connection(this, w, Network.Relationship.Homograph));

const synonymConnections = word.wordsInSynset

.filter(w => w.spelling !== word.spelling)

.map(w => new Connection(this, w, Network.Relationship.Synonym));

return pointerConnections.concat(homographConnections).concat(synonymConnections);

}And that’s all it takes! Three simple tweaks in a wrapper and we’ve got a network with significantly more nodes and plenty of connectivity.

Monorepo

After iterating on the prototype for a while, I was able to get it to a point where I felt the game was already a fun challenge, and I had confidence that I’d be able to fix some of the remaining frustrations later into development. It was time to leave the prototype behind and commit to a full production-ready project.

This project would need several distinct components: a server component to serve up directions in response to guesses, a client app for the user to interact with, and a script for pregenerating the puzzles.

When I have separate components like this, I also like to make a library to define important types that will be shared between components. This cuts down on redundant definitions and also helps to ensure consistency in the contracts between components.

The server and generator components will both need to share some functionality for interacting with WordNet, but this functionality should not be on the client, so we’ll split this into another component. And that gives us 5 components total (server, client, generator, common, wordnet).

And now we come across what is, in my opinion, the most frustrating part of TypeScript: projects including multiple apps.

Theoretically TypeScript does support this scenario via the Project References feature.

In practice, this isn’t so straightforward. The TypeScript documentation describes using this for fairly simplistic use cases such as being able to build a src directory and a test directory separately. In this case the test project can reference the src project using a path like ../src/Index, and this reference will still work after the build process.

But in a more typical monorepo structure, we run into an issue where if we want to get from nettle-server/src/index.ts to nettle-common/src/Network.ts, we need a reference like ../../nettle-common/src/Network. And after the project has been built, this will cause issues because it’s still pointing to the src directory in nettle-common, which does not contain any JavaScript files. We can workaround this by updating our reference to say ../../nettle-common/dist/Network, but this loses us the benefits of Project References, such as many features of cross-project IntelliSense.

I tried many different TypeScript config settings to attempt to resolve this, but to no avail.

After spending far more time on the issue than I would’ve liked, I eventually gave up and used Lerna to implement the monorepo structure. Sadly Lerna also does not provide the TypeScript features of Project References, but I was actually able to find a workaround to bring back this functionality:

I duplicated the tsconfig.json file in each project to make a separate tsconfig.build.json file. I made the tsconfig.json files extend their respective tsconfig.build.json file. I updated the build process to refer to tsconfig.build.json, but the IDE still refers to tsconfig.json. This allows us to define TypeScript configuration options that will ONLY apply to the IDE, and not to the build process.

Then in the tsconfig.json files, I used the paths option to map the shared projects via the relative paths to their src directories.

For example:

tsconfig.build.json

{

"compilerOptions": {

"module": "commonjs",

"target": "es2022",

"sourceMap": true,

"strict": true,

"noImplicitAny": false,

"esModuleInterop": true

},

"exclude": [

"node_modules",

"dist"

],

}tsconfig.json

/* These settings only apply to the IDE, not to the build process */

{

"extends": "./tsconfig.build.json",

"compilerOptions": {

"baseUrl": ".",

"paths": {

"nettle-common": ["nettle-common/src"]

}

}

}For IntelliSense purposes, the IDE will follow the paths definition and load the raw TypeScript files from the src directory. But during the build process, TypeScript will follow standard module resolution, and Lerna will guide it to the appropriate build artifacts.

This kinda-sorta works. It still has some pretty big issues such as not allowing the use of breakpoints in dependency projects, but it’s the best solution I could find.

I ran lerna link convert to hoist all the common dependencies into the root project. But a caveat I noticed here is that it also hoisted ALL devDependencies, which I had not anticipated. This caused some mild unexpected issues, so I manually moved the devDependencies back to each child project.

I did also experiment with replacing Lerna with npm workspaces. But strangely enough, this prevented certain Angular from being able to install dependencies correctly, so I switched back to Lerna.

AWS SAM

When working on this project, I wanted to test my code locally in a way that would closely replicate the environment in which it would run on the cloud. I wanted to be able to debug the code with breakpoints and watches locally, but be confident that it would run the same way once deployed.

In order to achieve this, I opted to try out AWS Serverless Application Model. AWS SAM is an open source tool that provides an infrastructure-as-code format that simplifies the creation of applications using Lambda, API Gateway, DynamoDB, and other AWS serverless resources. This part didn’t really interest me too much because I can already do these things easily in Terraform.

But AWS SAM also includes a CLI tool which uses Docker to create a local environment that’s similar to the real AWS Lambda execution environment, enabling local debugging. This was the key feature that I was interested in.

Unfortunately AWS SAM proved to be a lot of trouble.

First, the “AWS Toolkit for VSCode” extension did not support the new AWS SAM TypeScript project template. This was understandable, as beta support for TypeScript in AWS SAM had only been announced 1 month prior, this was bleeding-edge functionality. This issue was easily circumvented by creating my project via the CLI.

Second, the extension has a bug which prevents building certain types of projects if the project is on a different logical drive to the VSCode installation. It’s always a strange experience to exactly copy the step-by-step instructions for the “Hello World” example and have them fail, and this bug took me a long time to diagnose. Working around this issue basically involved disabling the TypeScript features in AWS SAM, and using VSCode’s preBuildTask system to run the build in advance so that SAM would only be dealing with plain old JavaScript.

The third issue I encountered was related to dependencies. The manual build system that I had set up did not include repackaging of dependencies. Previously I had CodeUri set as dist/, matching some guides I’d found online. But this excluded the node_modules directory from the build package. I resolved this by setting CodeUri to ./. But this meant that the app.js file was no longer at the root of the package, so I also had to change Handler from app.lambdaHandler to dist/app.lambdaHandler in order to fix this.

The fourth issue I encountered was that during the packaging process, AWS SAM will not follow symlinks. This means that the final package will be missing any dependencies which are added via Lerna, npm link, or local path dependencies. Given that this project was using Lerna heavily, this was a major issue.

In desperation, I turned to Webpack. Webpack is a powerful tool for transpiling, combining, and minifying code. It can be configured to merge my entire application as well as all its dependencies into a single JavaScript file. Unfortunately, Webpack also has a few idiosyncrasies which mean that configuring it tends to feel nightmarish. In this instance my use case was fairly standard, so I had Webpack configured in no time.

But again, I ran into issues with SAM. One such issue is that aws-sam-webpack-plugin always outputs artifacts to a directory called “build”, but the AWS Toolkit plugin only supports launching from a directory called “output”. It was simple enough to add in an extra preBuildTask to rename the directory. Even stranger though is that the SAM CLI does not support the “output” directory, so we must only run this move operation when we’re debugging, not when we’re packaging for a live deployment.

A bigger issue was that the AWS Toolkit extension’s direct-invoke launch request ALWAYS runs the sam build CLI command before invoking the code. This command overwrites the Webpack output. The plugin doesn’t provide any configuration option to allow skipping the build command. But it does provide the option to provide custom build arguments… So I implemented a hacky workaround of adding --help as a custom build argument, which essentially turns the build command into a no-op.

And finally, despite my best efforts I was completely unable to get sourcemaps to work correctly.

Based on all of these issues, I would not recommend using AWS SAM. I’ll be writing about an alternative in a future post.

Summarising results

During playtesting I found that it was often taking >50 guesses to find the target word, and many guesses returned ~10 directions. This meant that if we simply included all the clues the user was given in the shareable results we’d end up with a wall of emojis.

In order to avoid this, I decided to write an algorithm for determining which of the player’s guesses are most interesting. More specifically, I wrote three algorithms.

Summary algorithm 1 - improvement guesses

The first algorithm is simple: any clue the player received which was shorter than all their previous clue should be included. This is the most obvious metric of progress. It also has the benefit that if the player succeeded in solving the puzzle, this will automatically include the winning guess, since a clue of length zero will be shorter than any other clue.

Initially this was the only metric used to generate the summary, but playtesting revealed that the results weren’t very interesting.

Summary algorithm 2 - longest guess

The second algorithm is even simpler: the longest clue the player received should be included. Why? Because I think it’s funny. Especially when this longest clue was received in the late-game.

Summary algorithm 3 - path analysis

The third algorithm is a little trickier, and addresses a limitation of the first algorithm.

Consider the following game:

| Guess | Clue |

|---|---|

| cake | 🔼🔽🖋️🔼 |

| home | 🔼🔼🔽🖋️🔼 |

| greeting | 🔽🖋️🔼🖋️🔼🔼 |

| marriage | 🌟🔼🖋️🔽🔽 |

| wife | 🔼🔼🖋️🔽🔽 |

| spouse | 🔼🖋️🔽🔽 |

| relationship | 🔼🔽🔽 |

| connection | 🔼🔽🔽 |

| relation | 🔽🔽 |

| ratio | ✅ |

With only the first two algorithms, this would yield the following summary:

Nettle #----: 🎉/10

1: 🔼🔽🖋️🔼

3: 🔽🖋️🔼🖋️🔼🔼

7: 🔼🔽🔽

9: 🔽🔽

10: ✅The series of clues that lead the player to the example start with “marriage” and “spouse”, but the clues for these are not included because they’re shorter than the clue for “cake”. (Which didn’t end up being useful to the player)

The third algorithm attempts to find the clues that were most useful to the player, the clues which the player followed to the solution (or to get close to the solution).

This was a daunting idea at first, but fortunately I had already lost my fear of algorithms. After thinking for a bit, I had an idea that I wanted to test out.

The idea is to identify a number of significant “waypoints”, and for each of these find all the guesses where the player made progress towards that waypoint, similar to strategy 1. In essence:

- Identify the most significant “waypoints”.

- Highlight any clue that was the shortest clue so far to include that root.

Now, step #1 here requires some elaboration. What do I mean by a waypoint? Consider this diagram of all the guesses that the player made and their relations to one another:

From this diagram, it seems pretty apparent that “relation” was the critical clue for solving the puzzle, and we should show progress the player made towards this word. But computers don’t have our common sense, so how can we write an algorithm to identify such waypoints?

We can exclude the solution (distance-0) word, because including it will merely yield the same results from strategy 1.

We need to put a limit on how many words can be considered waypoints, otherwise every clue would provide a waypoints, and so every single clue would be highlighted. I decided to consider 1 waypoint for every 25 guesses, rounded up. Games where the player made more guesses are likely to include more paths that the player was chasing down.

Now, the slightly trickier part: we’ve calculated our fixed number of waypoints to look for, but how do we prioritise which words are most significant, which ones helped the player the most?

Sadly, we don’t have any measurable insights into the player’s thought process, so it’s not possible for us to know which clues gave the player insight. We have to try to approximate this from what we do know.

- I prioritised waypoints that were closer to the target word, since the player’s goal is to find that target word and so nearby waypoints represent more significant progress towards the goal. A distance-6 word that the player wasted 12 guesses chasing is probably not as valuable as a distance-2 word that the player spent 8 guesses tracking down.

- Among waypoints of the same distance, I prioritised those that had more clues including them. This indicates that the player spent more time working towards them, and so the player likely considered them significant.

- If two waypoints had the same distance and number of clues, I prioritised waypoints that appeared later in the game. In the case where the player completed the puzzle, I think more recent clues were probably more relevant to that victory.

With my sorted list of roots, it was then simple to apply the same logic from the first algorithm to determine which clues made progress towards those roots and should be highlighted.

const numberOfCluesReferencingWord: {[address: string]: number} = {};

for(let i = 0; i < this.state.guesses.length; i++) {

const guess = this.state.guesses[i];

for(let i2 = 0; i2 < guess.clue.length; i2++) {

const word = guess.clue[i2];

const address = this.getAddress(word);

numberOfCluesReferencingWord[address] = (numberOfCluesReferencingWord[address] ?? 0) + 1;

}

}

const cluesWithCount = this.state.guesses

.filter(g => g.clue.length > 1)

.map((g, i) => {

const address = this.getAddress(g.clue[0]);

return {

index: i,

clue: g.clue,

address: address,

count: numberOfCluesReferencingWord[address],

};

});

const numberOfWaypointsToSearchFor = Math.ceil(this.state.guesses.length / 25);

const waypoints = new Set(cluesWithCount

.sort((a, b) => {

// Shorter clues should be prioritised as waypoints

if(a.clue.length != b.clue.length) {

return a.clue.length - b.clue.length;

}

// Words that are references by more clues should be prioritised as waypoints

const addressA = this.getAddress(a.clue[0]);

const addressB = this.getAddress(b.clue[0]);

const countA = numberOfCluesReferencingWord[addressA];

const countB = numberOfCluesReferencingWord[addressB];

if(countA != countB) {

return b.clue.length - a.clue.length;

}

return b.index - a.index;

})

.slice(0, numberOfWaypointsToSearchFor)

.map(c => c.address)

);

const shortestClueIncludingWord = new Map<string, number>();

for(var i = 0; i < this.state.guesses.length; i++) {

const guess = this.state.guesses[i];

if(guess.clue.length < 2) {

continue; // Exclude the answer (it will be a highlight from Strategy #1 anyway)

}

// Only include words relating to one of the identified waypoints

if(!guess.clue.find(c => waypoints.has(this.getAddress(c)))) {

continue;

}

if(guess.clue.find(c => (shortestClueIncludingWord.get(this.getAddress(c)) ?? Infinity) <= guess.clue.length)) {

continue;

}

const peekaheadLength = guess.clue.length > 3 ? 2 : 1;

const peekedWord = guess.clue[peekaheadLength];

shortestClueIncludingWord.set(this.getAddress(peekedWord), guess.clue.length);

highlightGuessIndexes.add(i);

}Now the same game yields this summary:

Nettle #----: 🎉/10

1: 🔼🔽🖋️🔼

3: 🔽🖋️🔼🖋️🔼🔼

4: 🌟🔼🖋️🔽🔽

6: 🔼🖋️🔽🔽

7: 🔼🔽🔽

9: 🔽🔽

10: ✅Creating the target word list

Each day we want every player to receive the same target word. In order to achieve this, we need to create a wordlist to assign a word to each day.

Picking random WordNet lemmas is not a great strategy for forming this wordlist, because many lemmas in WordNet are not exactly what the average person would consider a word, and many more are obscure jargon.

I scoured the internet for datasets of common words, and I eventually came across this list of word frequency data within Wikipedia articles. I took a few thousand of the most frequently used words as a starting point.

From playtesting, I knew that hunting for nouns was the most fun, as they tend to be better connected within WordNet. So I ran a script to look up each of the words in WordNet and see if they have a noun synset associated with them, producing a filtered list in the process.

Reviewing the filtered list, I found that there were still a lot of words in there that I didn’t consider to be nouns. These are cases where the orthographic word (spelling) is frequently used due to a common verb/adjective/adverb sense, but the noun sense is very uncommon. Since I only found frequency data for othographic words and not for individual lemmas, I wasn’t able to find an automated way to filter these out. So I bit the bullet and manually reviewed the list of 5,000 words and ended up with around 2,500.

Finally, I ran a script to shuffle the final wordlist, and I was careful not to look at the results. Why? Because I wanted to be able to play the game too, I wanted to avoid spoilers!

Improving speed of puzzle generation through caching

In order to easily consume the WordNet database, I used an npm library called wordpos. The README for this library describes it as fast, and brags that it’s 5x faster than the previous version. Conceptually it is just reading from a local database file, so I’d expect it to be fast. Based on this, I initially used the functions from this library naïvely every time I wanted to retrieve data from WordNet. This worked fine when I was generating a puzzle here and a puzzle there for testing purposes.

But you’ll recall that my plan was to pregenerate all puzzles in advance, and I have a target word list containing about 2,500 words. At this point in time, generating a single puzzle took almost 5 minutes. I didn’t particularly want to leave my computer running for 8 straight days in order to generate all the puzzles.

When I started digging into the performance, I quickly realised that essentially all the time was being spent within wordpos functions, reading from the WordNet database. Doubtless this was exacerbated by the extra reads caused by the homograph and synonym shims I’d written, but even discounting them it was clear: accessing WordNet is slow.

But each time we generate a puzzle we are retrieving data about all the same words (the entire network), just in a different order. Additionally, the dataset contains <200,000 records, and each record is fairly compact. This is a near-perfect use case for a simple in-memory cache.

With the cache in place, generating a puzzle is reduced from ~280 seconds, to ~50 seconds. This approach does increase memory usage significantly (Node uses about 1.5GiB while running the generator with the cache) but here the speed increase is worth it.

The future: making Nettle easier

I published Nettle and shared it with some friends, and we had a lot of fun competing.

Something that became apparent pretty rapidly though was that Nettle is hard. I suspect that cryptic crossword fans would enjoy it, but it’s not all that accessible for people who like more casual puzzle games.

I have spent some time thinking of ideas to create an “easy mode” for Nettle. These might include:

- A “map” feature: a network diagram that displays all of the clues obtained so far, any known words, and how each clue connects to others. When I play Nettle I try to construct a map like this in my head, but it adds cognitive overhead and it requires a lot of guesswork. Having the game do this for players would make things substantially easier.

- Sense explanations on each guess: when the player makes guesses, they provide the spelling of a word, they do not choose the meaning. Nettle will return a clue for whichever synset containing that orthographic word has the shortest path to the target word. This sometimes uses a sense of the word that’s not known or obvious to the player, making the clue very difficult to interpret. Providing an option to indicate which sense Nettle has used when interpreting each guess would probably reduce the amount of frustrating clues the player encounters.

- A “hint” system: if the player gets stuck, they could request a hint. They could choose any clue they’ve received, and the game would reveal the dictionary definition of the next word along.

I’ve since been busy with other projects, but I’m excited to return to Nettle some day and make it into something that can be enjoyed by a wider range of people.